I'm receiving weird values for acousticness and instrumentalness for harpsichord recordings.

The two harpsichord tracks:

Trevor Pinnock

Luc Beauséjour

From the API documentation:

Acousticness

A confidence measure from 0.0 to 1.0 of whether the track is acoustic. 1.0 represents high confidence the track is acoustic.

Instrumentalness

Predicts whether a track contains no vocals. “Ooh” and “aah” sounds are treated as instrumental in this context. Rap or spoken word tracks are clearly “vocal”. The closer the instrumentalness value is to 1.0, the greater likelihood the track contains no vocal content. Values above 0.5 are intended to represent instrumental tracks, but confidence is higher as the value approaches 1.0.
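
To make the 0.5 threshold concrete, here is a minimal sketch in R that applies it to a small made-up data frame. The track names and values are placeholders, not real API output; the 0.1 simply echoes the score discussed at the end of this post.

```r
# Hypothetical values for illustration only; not real API output.
example_features <- data.frame(
  track = c("Recording A", "Recording B"),
  instrumentalness = c(0.1, 0.9)
)

# Per the documentation quoted above, values above 0.5 are intended
# to represent instrumental tracks.
example_features$predicted <- ifelse(
  example_features$instrumentalness > 0.5, "instrumental", "vocal"
)

print(example_features)
```

Read this way, a harpsichord recording scoring 0.1 falls well on the "vocal" side of the cutoff, which is what makes the value surprising.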

Getting the playlist track features

All code in R.

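library(spotifyr) # get_playlist_audio_features(); reads credentials from SPOTIFY_CLIENT_ID / SPOTIFY_CLIENT_SECRET
library(dplyr)    # %>% and mutate()
library(ggplot2)  # plotting

bach_prelude_playlist_features = get_playlist_audio_features("", "4yNYY3xmNhPTDrfFc0qG9b")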

bach_prelude_playlist_features = bach_prelude_playlist_features %>%
  mutate(track.duration_min = track.duration_ms / 1000 / 60) # Convert duration from milliseconds to minutes.

Plot the acousticness

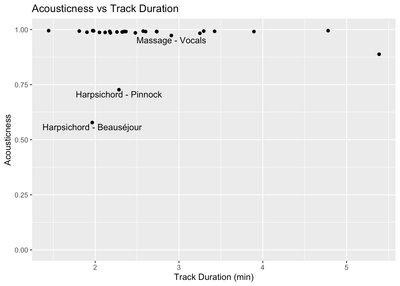

acoustic_v_duration = ggplot(bach_prelude_playlist_features, aes(
  x = track.duration_min, y = acousticness
)) + geom_point() + xlab("Track Duration (min)") + ylab("Acousticness") +
  geom_text(
    label = bach_harpsichord_labels, nudge_x = 0, nudge_y = -0.02
  ) + ggtitle("Acousticness vs Track Duration") + ylim(0, 1)

acoustic_v_duration

Plot the instrumentalness

instrumental_v_duration = ggplot(bach_prelude_playlist_features, aes(
  x = track.duration_min, y = instrumentalness
)) + geom_point() + xlab("Track Duration (min)") + ylab("Instrumentalness") +
  geom_text(
    label = bach_harpsichord_labels, nudge_x = 0, nudge_y = -0.02
  ) + ggtitle("Instrumentalness vs Track Duration") + ylim(0, 1)

instrumental_v_duration
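
Both plots pass `bach_harpsichord_labels` to `geom_text()`, but that vector is not defined in the code shown. One possible way to avoid a separate label vector is to map the label from a column inside `aes()`. The sketch below assumes a hypothetical `performer` column and placeholder numbers; it is not the real playlist data, and the actual data frame would likely need such a column added first.

```r
library(ggplot2)

# Placeholder data for illustration only; `performer` is a hypothetical column
# and the numbers are not real API output.
toy_features <- data.frame(
  performer = c("Performer A", "Performer B"),
  track.duration_min = c(1.5, 2.0),
  instrumentalness = c(0.1, 0.9)
)

# Mapping `label` inside aes() keeps each label tied to its own row.
labelled_plot <- ggplot(toy_features, aes(
  x = track.duration_min, y = instrumentalness, label = performer
)) +
  geom_point() +
  geom_text(nudge_y = -0.02) +
  xlab("Track Duration (min)") + ylab("Instrumentalness") +
  ylim(0, 1)

labelled_plot
```

With the label mapped per row, reordering or filtering the data frame cannot silently mismatch labels and points, which can happen with a standalone vector.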

Why does the Spotify API think that Trevor Pinnock’s recording sounds like it has vocals?! A score of 0.1 means that the API is quite certain that there is vocal content. It’s clear that harpsichords have rich harmonic content. Is it because fancy microphone techniques make the low strings of his harpsichord resonate differently from those of Beauséjour’s?